Integrating and Utilizing Ai-MicroCloud’s Managed Services in your Workflows via Zeblok SDK

Seamlessly integrate machine learning workflows with the Zeblok Ai-MicroCloud through the Zeblok SDK, enabling efficient and scalable deployment for enterprise solutions.

This is Part 1 of Exploring the Zeblok-SDK series.

In today's automation-driven landscape, managing resources through web or mobile interfaces can become cumbersome, particularly when using Infrastructure-as-Code (IaC) to manage machine learning infrastructure. To address the growing need for automation in this area, the Zeblok Ai-MicroCloud offers a Python-based SDK designed to streamline the management of ML infrastructure.

Outline

Available managed services in Ai-MicroCloud

Overview of the Zeblok SDK

Using Zeblok SDK in managing Ai-MicroCloud’s services

Conclusion

Feel free to skip ahead to any section that interests you.

Available managed services in Ai-MicroCloud

Constructing a comprehensive and efficient ML workflow is a complex task, involving numerous interdependent components. These can range from storing and processing large datasets, developing training and inference code, managing experimental results and model artifacts, to deploying models in production. Managing these diverse components can be challenging, especially for small enterprises or teams.

To address this, the Zeblok Ai-MicroCloud offers a broad suite of managed services tailored to the varied needs of ML projects. These services are designed to reduce the operational complexity of ML development, allowing enterprise developers to focus on core tasks. The following sections outline the available managed services.

Ai-WorkStation

Ai-WorkStations provide managed Jupyter Lab environments essential for ML development. Jupyter notebooks streamline experimentation and documentation, integrating all aspects of a data project into a unified platform. The Ai-WorkStation service enhances this with collaborative workstations and domain-specific setups, pre-configured with necessary packages and boilerplate code. Users can also attach object stores to manage and access data, codebases, trained models, and much more.

Ai-MicroService

In the evolving landscape of software development, microservices have become a favored architectural style, enabling developers to create and deploy modular components that are independently scalable and maintainable. The Ai-MicroService offering provides a managed service for deploying containerized microservices with ease. For example, users can deploy containerized components such as data preprocessing services, embedding creation services, or SQL/NoSQL database containers, all managed effortlessly through the platform. Additionally, Ai-MicroService integrates seamlessly with other managed services on the Ai-MicroCloud platform, forming a cohesive ecosystem that supports the entire ML lifecycle.

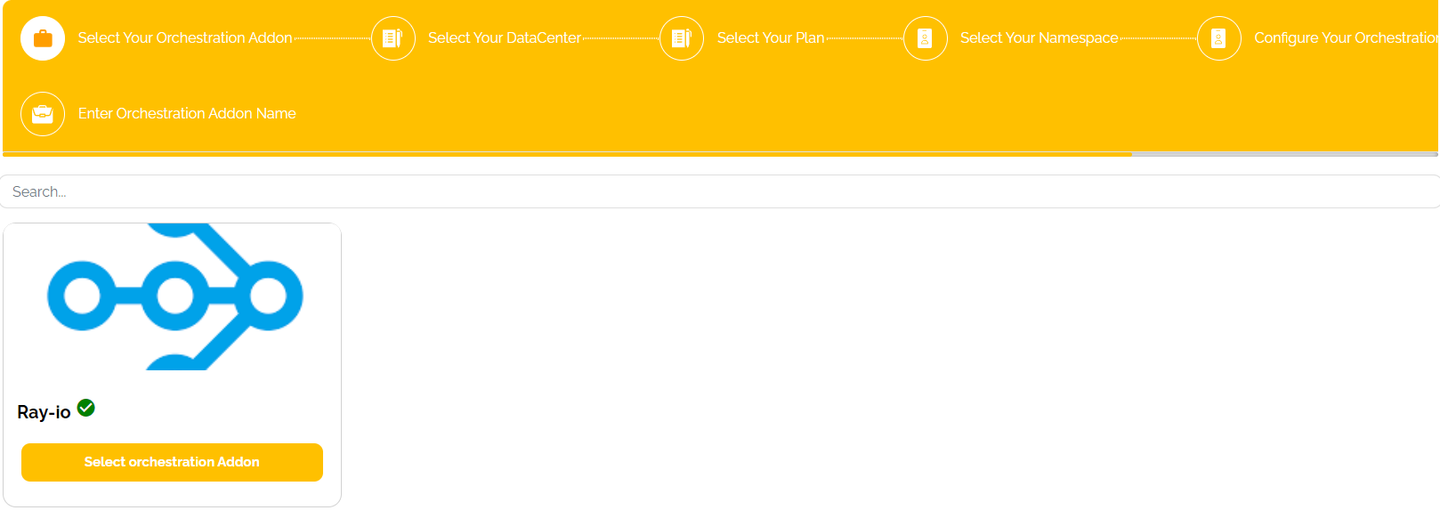

Orchestration-Addons

Training machine learning models, especially those with large datasets and complex model architectures, can be highly resource-intensive. To address this, the Orchestration Add-ons offer a managed Ray-Orchestration service. Users can convert their existing ML training code into Ray-compatible code and run it directly from an Ai-WorkStation, without dealing with the complexities of Ray server management. The platform automates the entire process, including support for automated hyperparameter tuning, which enhances model optimization and performance with minimal manual intervention.

Ai-Pipeline

AI and ML development often require running short-lived jobs such as data preprocessing, feature extraction, or batch inference. These tasks, while critical, can be challenging to manage, especially when specialized infrastructure is needed. The Ai-Pipeline managed service addresses this by allowing users to containerize their workloads and execute them on Ai-Pipeline. This service abstracts the underlying infrastructure, freeing developers from managing resource allocation, scaling, or job scheduling. Whether processing large datasets or performing complex transformations, Ai-Pipeline provides a reliable and scalable solution that integrates seamlessly with other Ai-MicroCloud services.

Ai-API

For developers needing to expose ML models or services as APIs, the Ai-API managed service offers an intuitive and scalable solution. By containerizing your service and deploying it through Ai-API, you create a fully managed API deployment that scales automatically based on demand. This service is ideal for organizations requiring real-time access to models or services, such as for predictive analytics, recommendation engines, or other data-driven applications.

DataLake

Datasets are crucial to any ML workflow, but managing and securing them can be daunting as they grow in size and complexity. The Ai-MicroCloud platform's DataLake service addresses these challenges with managed object storage designed to handle large volumes of data efficiently. Supporting various data types, including structured and unstructured data, DataLake ensures secure and scalable storage. Users can easily attach DataLake to Ai-Workstations or access it via our SDK, facilitating seamless integration with other platform services. Whether storing raw datasets, intermediate processing results, or final model artifacts, DataLake offers a reliable and accessible storage solution.

DataSet

Managing datasets involves not only storage but also version control and accessibility. ML projects often require multiple versions of datasets, reflecting different stages of preprocessing, augmentation, or labeling. The DataSet managed service simplifies this by automatically versioning each upload and providing easy access through our platform. Supporting a wide range of file types, including text, images, blobs, and PDFs, DataSet integrates with other Ai-MicroCloud services, enabling organized and efficient management of datasets within your preferred environment.

Zeblok SDK

Overview

You can install the Python SDK from PyPI by running the following command in your terminal:

pip install zeblok-sdk

Through the SDK, users can perform different actions on the following resources and managed services:

Resource

Plan

Namespace

Managed Service

Microservice

Orchestration-Addons

Ai-API

Ai-Pipeline

DataLake

Using Zeblok SDK in managing Ai-MicroCloud’s services

In the following subsections, we will explore the SDK components and their interfaces, focusing on performing various CRUD operations along with sample code snippets.
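
Every snippet in this section starts by building an APIAuth object from a base URL, an API access key, and an API access secret. As a minimal sketch, assuming you would rather not hard-code these values, they could be read from environment variables. The variable names (ZEBLOK_APP_URL and so on) and the load_auth_kwargs helper are hypothetical and not part of the SDK; only the three keyword names come from the snippets in this article.

```python
import os

# Hypothetical environment variable names; use whatever your deployment prefers.
ENV_TO_KWARG = {
    "ZEBLOK_APP_URL": "app_url",
    "ZEBLOK_API_ACCESS_KEY": "api_access_key",
    "ZEBLOK_API_ACCESS_SECRET": "api_access_secret",
}


def load_auth_kwargs(environ=None):
    """Build the keyword arguments for APIAuth from environment variables."""
    environ = os.environ if environ is None else environ
    missing = [name for name in ENV_TO_KWARG if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {kwarg: environ[name] for name, kwarg in ENV_TO_KWARG.items()}


# Demonstration with an explicit mapping so the sketch runs without real secrets.
demo_env = {
    "ZEBLOK_APP_URL": "https://example.invalid",
    "ZEBLOK_API_ACCESS_KEY": "demo-key",
    "ZEBLOK_API_ACCESS_SECRET": "demo-secret",
}
print(sorted(load_auth_kwargs(demo_env)))
```

The returned dictionary can then be unpacked into the constructor, for example APIAuth(**load_auth_kwargs()).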

Plans

In Ai-MicroCloud, plans define the configuration for compute resources, including CPU/GPU, storage, and memory. These plans can be attached to managed services when they are instantiated. Users can create custom auto-scaling or non-auto-scaling compute plans in different data centers, specifying the necessary storage and memory for various managed services.

While the SDK does not support the creation of new plans, it allows you to retrieve information about all available plans, obtain details for a specific plan using its plan ID, and verify the existence of a plan.

Create a Plan Object

from zeblok.plan import Plan
from zeblok.auth import APIAuth

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

plan_obj = Plan(api_auth=api_auth)

Get All Plans

To retrieve information about all available plans, use the get_all() method on the plan object. This function lists all plans in Ai-MicroCloud, displaying details such as ID, Plan Type, Cost, and Resource Information.

plan_obj.get_all()

Get Plan Information by ID

To obtain information about a specific plan, use the get_by_id() method with the plan ID.

plan_obj.get_by_id(plan_id='<your-plan-id>')

Validate Plan by ID

To verify the existence of a plan in Ai-MicroCloud, use the validate_id() method with the plan ID. This method checks if the plan is valid and exists.

plan_obj.validate_id(plan_id='<your-plan-id>', raise_exception=True)

Namespaces

Namespaces are not managed services but resources within Ai-MicroCloud used to logically categorize managed services. When provisioning a managed service, you can assign it to a namespace. Custom namespaces can be created to facilitate collaboration among different users within the same organization.

Although the SDK does not support creating namespaces, it does allow you to manage and validate existing ones. You can retrieve the list of namespaces, obtain detailed information about a specific namespace, and validate if a namespace exists in Ai-MicroCloud.

Create a Namespace Object

from zeblok.namespace import Namespace
from zeblok.auth import APIAuth

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

namespace_obj = Namespace(api_auth=api_auth)

Get All Namespaces

Retrieve information about all namespaces by calling the get_all() method on the namespace object. This will list all namespaces in Ai-MicroCloud, including details such as ID and name.

namespace_obj.get_all(print_stdout=True)

Get Namespace Information by ID

To get information about a specific namespace, use the get_by_id() method with the namespace ID.

namespace_obj.get_by_id(namespace_id='<your-namespace-id>', print_stdout=True)

Validate Namespace by ID

To verify the existence of a namespace, use the validate_id() method with the namespace ID. This method checks if the namespace is valid and exists.

namespace_obj.validate_id(namespace_id='<your-namespace-id>', raise_exception=True)

Explore the Zeblok Ai-MicroCloud with a 21-day free trial. Sign up at Zeblok Playground.

MicroServices

The Ai-MicroService offering enables users to deploy and manage containerized microservices within a unified ecosystem on the Ai-MicroCloud platform, effectively supporting the entire ML lifecycle.

Using the SDK, users can:

Spawn a microservice

Retrieve information about all available microservices

Get details about a specific microservice by ID

Validate the existence of a microservice

Create a MicroService Object

from zeblok.microservice import MicroService
from zeblok.auth import APIAuth

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

microservice_obj = MicroService(api_auth=api_auth)

Get All MicroServices

To retrieve information about all microservices, use the get_all() method on the microservice object. This will list all microservices in Ai-MicroCloud, including details such as ID, microservice name, description, and associated plans.

microservice_obj.get_all(print_stdout=True)

Get MicroService Information by ID

To obtain information about a specific microservice, use the get_by_id() method with the microservice ID.

microservice_obj.get_by_id(microservice_id='<your-microservice-id>', print_stdout=True)

Validate a MicroService

To verify the existence of a microservice, use the validate_id() method with the microservice ID. This checks if the microservice is valid and exists in Ai-MicroCloud.

microservice_obj.validate_id(microservice_id='<your-microservice-id>', raise_exception=True)

Spawn a MicroService

To deploy an existing microservice in Ai-MicroCloud via the SDK, use the spawn() method. Provide the necessary parameters, including display name, microservice ID, plan ID, microservice name, namespace ID, and other configuration details.

microservice_obj.spawn(
    display_name='<valid-display-name>',
    microservice_id='<valid-microservice-id>',
    plan_id='<valid-plan-id>',
    microservice_name='<valid-microservice-name>',
    namespace_id='<valid-namespace-id>',
    ports=[{"protocol": "HTTP", "number": 8501}],
    envs=[{"key": "<valid-key>", "value": "<valid-value>"}],
    args=[{"key": "valid_key"}],
    command="<comma-separate-valid-commands>"
)

Orchestration-Addons

Orchestration Add-ons streamline resource-intensive machine learning tasks with a managed Ray-Orchestration service. This enables users to efficiently execute ML training and other workloads on Ray.

Using the SDK, users can:

Spawn an orchestration

Retrieve information about all available orchestrations

Get details of a specific orchestration by ID

Validate if an orchestration exists

Create an Orchestration Object

from zeblok.orchestration import Orchestration
from zeblok.auth import APIAuth

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

orchestration_obj = Orchestration(api_auth=api_auth)

Get All Orchestrations

To retrieve information about all orchestrations, use the get_all() method. This lists all orchestrations in Ai-MicroCloud, including details such as ID, orchestration name, description, and associated plans.

orchestration_obj.get_all(print_stdout=True)

Get Orchestration Information by ID

To obtain details about a specific orchestration, use the get_by_id() method with the orchestration ID.

orchestration_obj.get_by_id(orchestration_id='<your-orchestration-id>', print_stdout=True)

Validate an Orchestration

To verify if an orchestration exists in Ai-MicroCloud, use the validate_id() method with the orchestration ID.

orchestration_obj.validate_id(orchestration_id='<your-orchestration-id>', raise_exception=True)

Spawn an Orchestration

To deploy an existing orchestration in Ai-MicroCloud via the SDK, use the spawn() method. Provide necessary parameters, including orchestration ID, plan ID, namespace ID, orchestration name, and worker configuration.
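
Since min_workers and max_workers are numeric, a small check before spawning can catch swapped or negative values. This is a sketch under the assumption that both are non-negative integers and that min_workers must not exceed max_workers; the worker_bounds helper is hypothetical, and the SDK may enforce its own rules.

```python
def worker_bounds(min_workers, max_workers):
    """Return the two worker keyword arguments after basic sanity checks."""
    for name, value in (("min_workers", min_workers), ("max_workers", max_workers)):
        # bool is a subclass of int, so it is rejected explicitly
        if isinstance(value, bool) or not isinstance(value, int) or value < 0:
            raise ValueError(f"{name} must be a non-negative integer, got {value!r}")
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    return {"min_workers": min_workers, "max_workers": max_workers}


print(worker_bounds(1, 4))
```

The result can be unpacked into the spawn() call alongside the other parameters, which are shown next with placeholder values.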

orchestration_obj.spawn(
    orchestration_id='<valid-orchestration-id>',
    plan_id='<valid-plan-id>',
    namespace_id='<valid-namespace-id>',
    orchestration_name="<valid-orchestration-name>",
    # Replace the two placeholders below with integers
    min_workers=<min-num-workers>,
    max_workers=<max-num-workers>
)

Ai-Pipelines

The Ai-Pipeline service simplifies the execution of short-lived jobs by allowing users to run workloads without managing the underlying infrastructure. It offers a scalable solution that integrates with the other Ai-MicroCloud services.

Using the SDK, users can:

Create and spawn an Ai-Pipeline

Retrieve information about all existing Ai-Pipelines

Validate if an Ai-Pipeline exists

Create an Ai-Pipeline Object

from zeblok.datalake import DataLake
from zeblok.auth import APIAuth
from zeblok.pipeline import Pipeline

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

# Use the credentials of your object store (Azure, AWS, or MinIO)
datalake_obj = DataLake(
    api_auth=api_auth,
    access_key="<azure-account-name-from-your-UI>/<aws-access-key-id-from-your-UI>/<minio-access-key-id-from-your-UI>",
    secret_key="<azure-account-key-from-your-UI>/<aws-secret-access-key-from-your-UI>/<minio-secret-access-key-from-your-UI>",
    bucket_name="<your-bucket-name>"
)

ai_pipeline_obj = Pipeline(api_auth=api_auth, datalake=datalake_obj)

Get All Ai-Pipelines

To retrieve information about all Ai-Pipelines, use the get_all() method with the state parameter. This lists all Ai-Pipelines in Ai-MicroCloud with their respective states.

# Get all pipelines in the 'ready' state
ai_pipeline_obj.get_all(state="ready", print_stdout=True)

# Get all pipelines in the 'created' state
ai_pipeline_obj.get_all(state="created", print_stdout=True)

Validate an Ai-Pipeline

To verify if an Ai-Pipeline exists in Ai-MicroCloud, use the validate() method with the Ai-Pipeline image name and state.

ai_pipeline_obj.validate(image_name="<image-name>", state="<valid-state>")

Spawn an Ai-Pipeline

Ai-Pipeline provides two methods for deployment:

Create and Spawn in One Call: To create and deploy an Ai-Pipeline in a single step, use the create_and_spawn() method.

ai_pipeline_obj.create_and_spawn(
    ai_pipeline_name="<valid-ai-pipeline-name>",
    ai_pipeline_folder_path='<valid-pipeline-folder-path>',
    caas_plan_id='<valid-caas-plan-id>',
    ai_pipeline_plan_id='<valid-pipeline-plan-id>',
    namespace_id='<valid-namespace-id>'
)

Create Once and Spawn Multiple Times: First, create an Ai-Pipeline using the create() method. Then, spawn it multiple times as needed using the spawn() method.

# Create the pipeline
ai_pipeline_image_name = ai_pipeline_obj.create(
    ai_pipeline_name="<valid-ai-pipeline-name>",
    ai_pipeline_folder_path='<valid-pipeline-folder-path>',
    caas_plan_id='<valid-caas-plan-id>',
    ai_pipeline_plan_id='<valid-pipeline-plan-id>',
    namespace_id='<valid-namespace-id>'
)

# Spawn the pipeline multiple times
# First time
ai_pipeline_obj.spawn(
    ai_pipeline_image_name=ai_pipeline_image_name,
    ai_pipeline_plan_id='<valid-plan-id-1>',
    namespace_id='<valid-namespace-id-1>'
)

# Second time
ai_pipeline_obj.spawn(
    ai_pipeline_image_name=ai_pipeline_image_name,
    ai_pipeline_plan_id='<valid-plan-id-2>',
    namespace_id='<valid-namespace-id-2>'
)

Ai-API

The Ai-API managed service enables developers to deploy and scale their ML models or services as APIs, providing real-time access and automated scalability for various applications.

Using the SDK, users can:

Create and spawn an Ai-API

Retrieve information about all existing Ai-APIs

Validate if an Ai-API exists

Create an Ai-API Object

from zeblok.datalake import DataLake
from zeblok.auth import APIAuth
from zeblok.api import API

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

# Use the credentials of your object store (Azure, AWS, or MinIO)
datalake_obj = DataLake(
    api_auth=api_auth,
    access_key="<azure-account-name-from-your-UI>/<aws-access-key-id-from-your-UI>/<minio-access-key-id-from-your-UI>",
    secret_key="<azure-account-key-from-your-UI>/<aws-secret-access-key-from-your-UI>/<minio-secret-access-key-from-your-UI>",
    bucket_name="<your-bucket-name>"
)

ai_api_obj = API(api_auth=api_auth, datalake=datalake_obj)

Get All Ai-APIs

To retrieve information about all Ai-APIs, use the get_all() method with the state parameter. This lists all Ai-APIs in Ai-MicroCloud with their respective states.

# Get all APIs in the 'ready' state

ai_api_obj.get_all(state="ready", print_stdout=True)

# Get all APIs in the 'deployed' state

ai_api_obj.get_all(state="deployed", print_stdout=True)

Validate an Ai-API

To verify if an Ai-API exists in Ai-MicroCloud, use the validate() method with the Ai-API image name and state.

ai_api_obj.validate(image_name="<image-name>", state="<valid-state>")

Spawn an Ai-API

Ai-API offers two deployment methods:

Create and Spawn in One Call: To create and deploy an Ai-API in a single step, use the create_and_spawn() method.

ai_api_obj.create_and_spawn(
    ai_api_name='<valid-ai-api-name>',
    model_folder_path='<valid-model-folder-path>',
    plan_id='<valid-plan-id>',
    namespace_id='<valid-namespace-id>',
    ai_api_type='<valid-ai-api-type>'
)

Create Once and Spawn Multiple Times: First, create an Ai-API using the create() method. Then, spawn it multiple times as needed.

# Create the API
ai_api_image_name = ai_api_obj.create(
    ai_api_name='<valid-ai-api-name>',
    model_folder_path='<valid-model-folder-path>',
    ai_api_type='<valid-ai-api-type>'
)

# Spawn the API multiple times
# First time
ai_api_obj.spawn(
    image_name=ai_api_image_name,
    plan_id='<valid-plan-id-1>',
    namespace_id='<valid-namespace-id-1>'
)

# Second time
ai_api_obj.spawn(
    image_name=ai_api_image_name,
    plan_id='<valid-plan-id-2>',
    namespace_id='<valid-namespace-id-2>'
)

DataLake

The DataLake service on Ai-MicroCloud provides secure, scalable object storage for managing large datasets. It can be attached to Ai-WorkStations or accessed via the SDK, giving reliable access to all data types throughout the ML workflow.

Using the SDK, users can:

Upload a file or folder

Download a file

Get a presigned download URL for an object

Create a DataLake Object

from zeblok.datalake import DataLake
from zeblok.auth import APIAuth

# Authenticate with the credentials generated in the Web UI
api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

# Use the credentials of your object store (Azure, AWS, or MinIO)
datalake_obj = DataLake(
    api_auth=api_auth,
    access_key="<azure-account-name-from-your-UI>/<aws-access-key-id-from-your-UI>/<minio-access-key-id-from-your-UI>",
    secret_key="<azure-account-key-from-your-UI>/<aws-secret-access-key-from-your-UI>/<minio-secret-access-key-from-your-UI>",
    bucket_name="<your-bucket-name>"
)

Upload a File or Folder

To upload a file or folder, use the upload_object or upload_folder methods.

# Upload a file
datalake_obj.upload_object(local_file_pathname="<local-filepath-name>", object_name="<object-name>")

# Upload a folder
datalake_obj.upload_folder(folder_path="<local-folder-path>")

The relative paths are preserved in the object storage systems.
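
To illustrate what preserving relative paths means, the sketch below maps each file in a local folder to the name it would receive if the object name were simply the file's path relative to that folder. This is an assumption made for illustration: the exact key layout used by upload_folder (for example, whether the folder's own name is prefixed) is not stated here, and object_names_for_folder is a hypothetical helper that does not call the SDK.

```python
import tempfile
from pathlib import Path


def object_names_for_folder(folder_path):
    """Map each file under folder_path to a POSIX-style path relative to it."""
    root = Path(folder_path)
    return {
        str(path): path.relative_to(root).as_posix()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


# Build a small throwaway folder tree to show the mapping.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "images" / "train").mkdir(parents=True)
    (Path(tmp) / "images" / "train" / "cat.png").write_bytes(b"")
    (Path(tmp) / "labels.csv").write_text("id,label\n")
    for object_name in object_names_for_folder(tmp).values():
        print(object_name)
```

A mapping like this can be used to preview the resulting object names before a large upload.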

Download a File

To download a file, use the download_object method.

datalake_obj.download_object(object_name="<object-name>", local_dir="<local-dir>", filename="<filename>")

The object is downloaded to the given local directory and saved under the given filename.

Get a PreSigned download URL

To create a presigned URL for downloading an object, use the get_presigned_url method.

datalake_obj.get_presigned_url(object_name="<object-name>")

DataSet

The DataSet managed service simplifies dataset management by automatically versioning uploads and supporting a wide range of file types. It integrates with the other Ai-MicroCloud services, promoting a more organized and efficient ML workflow.

Using the SDK, users can:

List all datasets in Ai-MicroCloud

Retrieve information about a specific dataset by name

Create a new dataset

Upload files to the dataset

Create a DataSet object

from zeblok.datalake import DataLake
from zeblok.auth import APIAuth

api_auth = APIAuth(
    app_url='<your-base-url>',
    api_access_key='<your-api-access-key-from-Web-UI>',
    api_access_secret='<your-api-access-secret-from-Web-UI>',
)

dataset_obj = DataLake(api_auth=api_auth)

Get All DataSets

To list all datasets in Ai-MicroCloud, use the get_all() method on the dataset object.

dataset_obj.get_all(print_stdout=True)

Get DataSet by Name

To retrieve information about a specific dataset, use the get_by_name() method with the dataset name.

dataset_obj.get_by_name(dataset_name="<dataset-name>")

Create a New DataSet

A DataSet entity consists of different versions of uploaded files. To create a new dataset, use the create_dataset() method.

dataset_obj.create_dataset(dataset_name="<dataset-name>", dataset_description="<multiline-dataset-description>")

Create DataSet Versions

Versioning datasets is crucial for tracking ongoing changes. Upload files to the dataset service, which will automatically handle versioning.
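
The upload call takes a list of local file paths. One way to assemble that list from a folder is sketched below; collect_filepaths is a hypothetical helper that uses only the standard library, and the default patterns are arbitrary examples.

```python
import tempfile
from pathlib import Path


def collect_filepaths(folder, patterns=("*.csv", "*.txt")):
    """Return a sorted, de-duplicated list of file paths matching any pattern."""
    root = Path(folder)
    found = set()
    for pattern in patterns:
        found.update(str(p) for p in root.rglob(pattern) if p.is_file())
    return sorted(found)


# Build a throwaway folder to show which files are picked up.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "raw").mkdir()
    (Path(tmp) / "raw" / "part-1.csv").write_text("a,b\n")
    (Path(tmp) / "notes.txt").write_text("v1\n")
    (Path(tmp) / "model.bin").write_bytes(b"\x00")
    for filepath in collect_filepaths(tmp):
        print(Path(filepath).name)
```

The resulting list can be passed as the filepaths argument in the call that follows.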

dataset_obj.upload_dataset(dataset_id="<dataset-id-from-create-dataset-fn>", filepaths=["<filepaths-of-files-to-be-uploaded>"])

Conclusion

The first installment of the "Exploring the Zeblok-SDK Series" presented the Zeblok SDK, a robust tool designed to streamline and enhance machine learning workflows on the Zeblok Ai-MicroCloud platform. This SDK enables developers to efficiently develop, deploy, and scale their ML models, while reducing operational complexity. Having covered the key components of the Zeblok SDK, future sections of this series will delve deeper into practical strategies for integrating the SDK into your daily AI and ML workflows.